As published in HPCwire.

“What’s the size of the AI market?” It’s a totally normal question for anyone to ask me. After all, I’m an analyst, and my company, Intersect360 Research, specializes in scalable, high-performance datacenter segments, such as AI, HPC, and Hyperscale. And yet, a simple answer eludes me, and I wind up answering a question with a question. “Which parts do you want to count?”

If you just want the biggest number you can justify—and indeed, that’s what many people want—we can develop a pretty big “market” for AI. Every smartphone and tablet has some form of inferencing built in. So do most new cars. The retail and banking industries are being transformed, as are both agriculture and energy. What part of the global economy isn’t part of the AI market writ large?

But if you’re asking me, that’s probably (hopefully) not what you had in mind. Let’s eliminate all the cars, phones, and smart thermostats, as well as the downstream influence of the revenue that comes from AI-generated sources, and just talk about the data center.

We should get finer still. Soon, any enterprise server might do inferencing at some level, even if it is simply serving email, streaming videos, or processing transactions. We're looking for scalable AI on multiple nodes, with specific technology choices to accommodate those workloads. Moreover, the buying organization probably has an intentional budget category indicating that this infrastructure is there for AI. (With these guideposts, it starts getting easy to see similarities to the HPC market.)

Okay, now you want the size of the “AI market”? Easy. We just need to know which parts you want to count.

Don’t roll your eyes at me like that. Let me outline a few segments, and you’ll see what I mean:

- Hyperscale. The largest segment buying AI infrastructure that is strictly for AI, by far, is the hyperscale segment. You might think of these as "cloud" companies. If you do, you do them a disservice. Cloud serving is one line of business a hyperscale company might pursue, but consider: Is there more to Amazon than AWS? More to Microsoft than Azure? More to Alphabet than Google Cloud? Apple has a consumer cloud, iCloud, but is far bigger than that. And what about Meta, which isn't a cloud company at all? These companies have enormous investments in AI for their own purposes, cloud be damned: to analyze the universes of personal and enterprise data they possess. And yet, they may be difficult to target directly with a traditional sales approach for AI solutions.

- HPC-using organizations. If you let go of the idea that the infrastructure has to be used exclusively for AI, the biggest AI market segment is the HPC market. Most HPC-using organizations (over 80% in recent Intersect360 Research surveys) are extending their HPC environments to incorporate AI. The Frontier supercomputer at Oak Ridge National Laboratory certainly does AI; it seems to be part of the AI market. The same reasoning applies to a major supercomputer at an oil and gas company, jam-packed with GPUs to add machine learning to its workloads, alongside seismic processing and reservoir simulation. Most of these systems would have existed without AI, but HPC budgets are up, and HPC configurations are evolving because of AI.

- Non-HPC enterprise. This segment is the pure AI market, representing true net-new spending in enterprise AI, and it's what most people are thinking of when they ask about the AI market. But even this segment bifurcates, because the majority of spending on enterprise AI projects here goes to the cloud. In that case, there is spending on AI, but the infrastructure itself is back on the hyperscale side. Sometimes, the spending is non-cloud, whether traditionally on-premises or via some form of co-location or services agreement. This last piece is growing very fast right now but is relatively small compared to the rest.

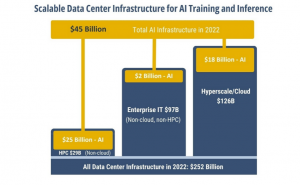

Let’s take these three segments together and see what the “AI market” looked like in 2022.

$45 billion! That's a good size for what can reasonably be called the "AI market" for scalable data center infrastructure. That's about 18% of all data center infrastructure spending in 2022. Only a small portion of it is the "Enterprise AI" segment that is all the rage.

Wait, wait, before you tear it down on social media, please remember the following three qualifications:

- The above chart shows spending in 2022. There is explosive growth in 2023, which is still in progress. The middle bar (non-cloud, non-HPC enterprise AI) will have the highest percentage growth rate this year. The Hyperscale column will add the most total value, especially with new AI-focused clouds like CoreWeave and Lambda entering the picture.

- This total counts infrastructure, not cloud spending. HPC and non-HPC enterprises combined to spend $10 billion on cloud computing in 2022, most of which was for AI-specific workloads.

- Many “enterprises” are also doing HPC. Companies with HPC budgets that expand into AI are counted in the first column, not the second. That includes manufacturing, finance, energy, healthcare, and pharma examples—all frequently cited as enterprise AI use cases.

Now, let’s return to that first question you asked me: “What’s the size of the AI market?” I think you meant to ask me, “What’s the size of the AI market opportunity?” The parts you want to count depend on what kind of technology or solution you’re selling.

For example, a vendor of processing elements targeting AI, whether the market leader, Nvidia, or any challenger, can reasonably look at the entire $45 billion with only a few caveats. Organizations that intend to serve both traditional HPC and new AI in the same infrastructure will tend toward fewer GPUs per node on average, since most HPC applications are not optimized to use more than two GPUs per node. Hyperscale companies have massive buildouts but are hard to target with anything like a normal sales approach, and the major ones all have their own in-house processors as competitors.

The most important dynamic to realize is that while the AI market is large and fast-growing, the stereotypical enterprise AI use case is more idealized than it is representative of the market, like Margot Robbie as Stereotypical Barbie. It’s not that no companies fit the mold, but most of them are more complicated than the basic version.

Are we talking about training or inference? Is the data on-prem, cloud, or hybrid? Where is the computing? How much retraining, and where is that? And is AI the only thing this infrastructure is used for, or does it also perform some other non-AI computation? Ask these questions, and you quickly discover how rarely AI is stereotypical.

Finally, it cannot be stressed enough how much of the market is tied to hyperscale companies, either directly for their own AI initiatives or indirectly through cloud computing. This model challenges the go-to-market strategy of every company, from startups to giants, with an AI solution.

Growth markets are generally attractive because it's easy to find a customer, and you can be confident of finding another next year. AI is taking off, no doubt. It is changing how we live and work, and it deserves the attention it's getting. It's also complicated, and it's still early in the game.

Opportunities in AI abound, but they might not be what you think they are, and they’re rarely basic. If everyone chases the stereotypical enterprise AI use case, we may find that the relatively few idealized examples are over-competed relative to the size of the opportunity, no matter how fast it’s growing.

But for everyone who read the headline and the first sentence, scanned for the chart, glanced at Barbie, and skipped to the end without reading any of the middle parts…

The AI infrastructure market was $45 billion in 2022, with super-high growth forecast for this year. So simple.